The Three Waves of GenAI Adoption

Scaling a GenAI team is like preparing an orchestra for a grand symphony. You don’t just add more musicians randomly. You need the right instruments, the right sections, and a conductor who knows exactly when each player should come in. Without this harmony, the performance falls flat, no matter how talented the individual musicians are. Similarly, scaling Generative AI (GenAI) engineering teams requires more than just increasing headcount. It demands precise alignment between your team’s structure, skills, and the maturity of your AI initiatives.

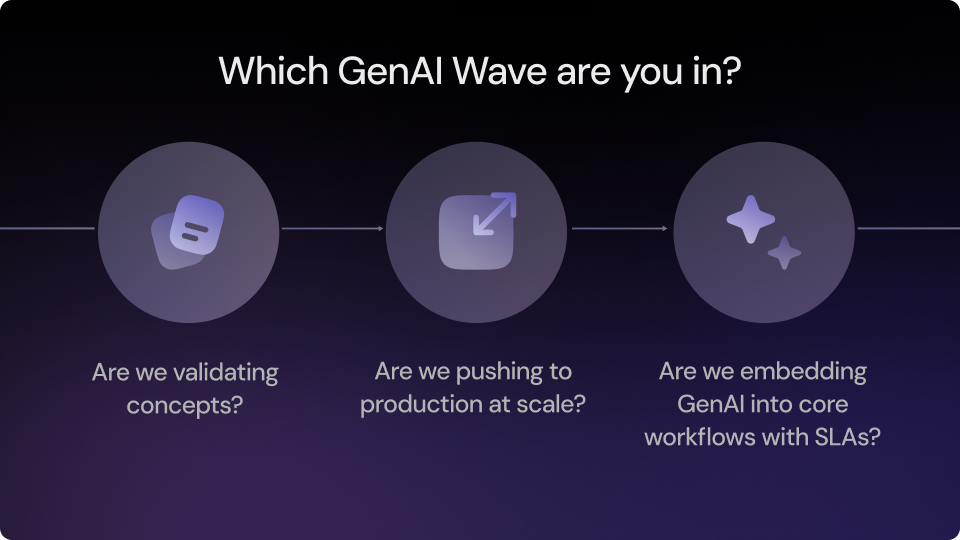

In this blog, we’ll map the three waves of GenAI adoption (prototyping, productization, and embedding) and explain why scaling for the wrong wave is the biggest pitfall companies face. Understanding where you are on this curve is crucial to building a team that delivers real business impact.

Wave 1: Sandbox to Signal

What it looks like:

At this stage, teams experiment with GPT-powered Slackbots, internal document summarizers, or basic ChatGPT wrappers. The tech stack is lightweight: LangChain, OpenAI APIs, Streamlit, and Hugging Face Transformers. The team typically consists of one or two generalist ML or backend engineers, sometimes supported by a data scientist exploring fine-tuning.

The challenge:

These projects are sandbox experiments aimed at quick validation, not production stability. The common mistake is pushing these prototypes into production without the necessary infrastructure.

Hiring trap:

Many companies respond by hiring more generalists to “scale” the project, but what’s really needed is the first MLOps or LLMOps engineer to build stability and operational foundations.

Wave 2: Scaling without breaking

What it looks like:

GenAI moves from experimentation to production-grade applications. Use cases include customer support agents powered by retrieval-augmented generation (RAG), SEO content generation engines, AI workflows for lead scoring, and large-scale document summarization.

The tech stack includes vector databases (Weaviate, Pinecone, Qdrant), fine-tuning with LoRA/QLoRA, prompt management tools (LangSmith, PromptLayer), model versioning and packaging (MLflow, BentoML), API gateways, load testing, and cost monitoring.

The challenge:

This is where many teams hit a wall. The initial POC shows promise, but the stack is fragile, undocumented, and costly. RAG pipelines hallucinate under load, APIs time out, and support teams scramble to fix live AI bugs.
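One way to soften the timeout failures described here is to give every model call a deadline, retry with backoff, and fall back to a safe response when all attempts fail. The sketch below is a minimal illustration using only Python's standard `asyncio`. `call_model`, `answer_with_deadline`, the `FALLBACK` text, and the timeout and backoff values are all hypothetical stand-ins, not part of any tool named in this post.

```python
import asyncio

# Hypothetical fallback message shown when the model cannot answer in time.
FALLBACK = "Sorry, I can't answer right now. A human agent will follow up."


async def call_model(prompt: str, delay: float) -> str:
    """Hypothetical stand-in for a real LLM or RAG call; `delay` simulates latency."""
    await asyncio.sleep(delay)
    return f"answer to: {prompt}"


async def answer_with_deadline(
    prompt: str,
    delays: list[float],
    timeout_s: float = 0.05,
    backoff_s: float = 0.01,
) -> str:
    """Make one attempt per entry in `delays`, each bounded by `timeout_s`.

    Returns the fallback message if every attempt times out.
    """
    for attempt, delay in enumerate(delays):
        try:
            return await asyncio.wait_for(call_model(prompt, delay), timeout=timeout_s)
        except asyncio.TimeoutError:
            # Exponential backoff, skipped after the final attempt.
            if attempt < len(delays) - 1:
                await asyncio.sleep(backoff_s * (2 ** attempt))
    return FALLBACK


if __name__ == "__main__":
    # First attempt is too slow and times out; the second succeeds.
    print(asyncio.run(answer_with_deadline("Where is my order?", [0.5, 0.0])))
```

In a real service the simulated `delay` would disappear and `call_model` would wrap the actual inference client. The point of the sketch is that the deadline and the fallback are explicit design decisions rather than accidents of the HTTP library.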

Hiring trap:

Scaling with generalists here leads to velocity loss. The team needs specialists: LLMOps engineers for infrastructure, prompt engineers who think like product owners, backend engineers skilled in async architectures and inference optimization, and QA roles focused on GenAI output evaluation.
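To make "GenAI output evaluation" concrete, here is a minimal sketch of the kind of regression check a QA role might start with: a fixed set of prompts, each with phrases the answer must or must not contain, and a pass rate tracked across releases. The names `EvalCase`, `passes`, and `pass_rate` are hypothetical, and substring matching is a deliberately crude proxy. Real evaluation suites usually add semantic or rubric-based scoring on top.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class EvalCase:
    """One regression case: a prompt plus simple expectations on the output."""
    prompt: str
    must_include: list[str] = field(default_factory=list)
    must_not_include: list[str] = field(default_factory=list)


def passes(case: EvalCase, output: str) -> bool:
    """Case-insensitive substring checks against the generated output."""
    text = output.lower()
    has_required = all(s.lower() in text for s in case.must_include)
    has_forbidden = any(s.lower() in text for s in case.must_not_include)
    return has_required and not has_forbidden


def pass_rate(cases: list[EvalCase], generate: Callable[[str], str]) -> float:
    """Run `generate(prompt)` on every case and return the fraction that pass."""
    if not cases:
        return 0.0
    return sum(passes(c, generate(c.prompt)) for c in cases) / len(cases)


if __name__ == "__main__":
    cases = [
        EvalCase("What is the refund window?", must_include=["30 days"]),
        EvalCase("Who are you?", must_not_include=["as an ai"]),
    ]

    def fake_generate(prompt: str) -> str:
        # Hypothetical model stub used only for this demonstration.
        if "refund" in prompt.lower():
            return "Refunds are accepted within 30 days."
        return "As an AI, I cannot say."

    print(pass_rate(cases, fake_generate))
```

Passing `generate` in as a plain callable keeps the harness independent of any particular model client, so the same cases can be replayed against a prompt change, a model upgrade, or a new retrieval index.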

Wave 3: Embedded AI as a core product

What it looks like:

GenAI becomes core to the product itself. Use cases include AI-generated compliance reports, document parsing, real-time personalization, automated content generation, smart inventory forecasting, multimodal search, and AI customer service agents with reasoning and escalation.

The tech stack includes hybrid models combining LLMs and traditional ML, event-driven architectures, CI/CD pipelines for ML, feature platforms and model observability (Tecton, Featureform, Arize), Kubernetes with GPU-aware scaling, and compliance logging with audit trails.

The challenge:

The team now operates in a high-stakes environment where model drift, latency, or hallucinations can cause regulatory penalties or revenue loss. The team structure must evolve into multiple small, cross-functional squads with embedded ML engineers, product managers, and infrastructure specialists, supported by clear SLAs and tight feedback loops.
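A "clear SLA" is easiest to act on when it is written as a number a squad can check automatically. As a rough sketch, the snippet below computes a nearest-rank p95 latency and compares it to a budget. The function names, the sample latencies, and the 1000 ms budget are hypothetical values chosen for illustration only.

```python
import math


def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_latency_sla(latencies_ms: list[float], p95_budget_ms: float) -> bool:
    """True when the p95 latency of the sample is within the agreed budget."""
    return percentile(latencies_ms, 95) <= p95_budget_ms


if __name__ == "__main__":
    # Hypothetical sample: 18 fast requests, one slow, one very slow.
    sample = [100.0] * 18 + [900.0, 2000.0]
    print(percentile(sample, 95))            # p95 of the sample
    print(meets_latency_sla(sample, 1000))   # compare against a 1000 ms budget
```

The same pattern extends to other signals named above, such as a maximum tolerated hallucination rate from an evaluation suite, as long as each one has an owner and a threshold.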

Hiring trap:

Many companies still try to “hire a few more AI engineers” instead of re-architecting team topology to include platform engineering, observability tooling, and QA frameworks purpose-built for GenAI.

Why Most Teams Are Structured for the Wrong Wave

Many organizations claim to be “moving fast with GenAI” but remain stuck in Wave 1 team structures while attempting Wave 2 or 3 outcomes. The root cause? Hiring for prototypes when the business demands production-grade products. If you’re scaling with generalists and part-time engineers while expecting product-level reliability, you’re misaligned.

Before you break out of this misalignment, you need to know where you stand.

Scale for the Wave You’re In

The real edge in GenAI comes from the speed at which you deliver, not just from which model you use. The teams that win are those that align their structure, hiring, and delivery model to their current maturity stage by building squads that ship weekly, evaluate continuously, and embed AI deeply into products.

Are you conducting a symphony or just adding more musicians? The future of GenAI depends on scaling smart, not just fast.

For more insights and detailed playbooks on building and scaling GenAI teams effectively, explore other blogs from Soul AI Pods.
